What an exciting time to be a Network Engineer! As technology grows at a rapid pace, our industry demands that our skill sets grow at the same speed. The problem is that there is SO much going on that it can be overwhelming. The traditional job of a Network Engineer, as we know it, is shifting gears and beginning to intertwine with the DevOps world. In a world where we need things done “like yesterday!”, developer tools such as Docker are more important than ever in our line of work. They save us time, and they can make a huge impact on how efficiently we manage our networks.

What is Docker?

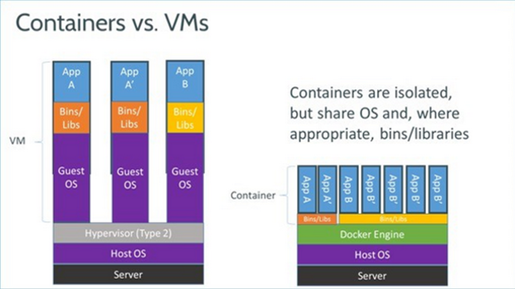

Originally built for Linux, Docker is an open source platform widely used by app developers to build, ship, and run applications. It virtualizes the operating system, whereas a Virtual Machine virtualizes hardware. Docker lets a developer automate the deployment of applications in individual Containers that are portable, lightweight, and fast, so they can be moved around and run reliably in any environment. This eliminates the classic problem of an application working on the developer’s laptop but not on the tester’s machine. I know, I know, Containers are nothing new, but many debate whether tools such as Docker are replacements for Virtual Machines, especially now that automation is huge in I.T. At the end of the day, it comes down to the use case, because like any other technology, both have their pros and cons. Join me as we take a quick, high-level look at what makes Docker so special!

Dockerfile:

Every container we create starts from an image, and every image we build ourselves begins with a Dockerfile. The Dockerfile is essentially a blueprint of what the container will consist of: the operating system, programming language, commands, network ports, and so on. Most importantly, it defines the purpose of the container, meaning what the container will do once we run it in production.
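To make the blueprint idea concrete, here is a minimal Python sketch that assembles a Dockerfile from exactly those pieces: a base operating system (`FROM`), the language runtime (`RUN`), a network port (`EXPOSE`), and the command the container runs (`CMD`). The helper name `render_dockerfile`, the Ubuntu base image, the `apt-get` install, and the `app.py` entry point are illustrative assumptions, not a recommended production setup.

```python
import json


def render_dockerfile(base_image: str, packages: list[str], port: int, command: list[str]) -> str:
    """Assemble a minimal Dockerfile from its main building blocks (hypothetical helper)."""
    # FROM: the operating system layer the image starts from
    lines = [f"FROM {base_image}"]
    if packages:
        # RUN: install the language runtime and tools the app needs
        # (assumes a Debian/Ubuntu base image that ships apt-get)
        lines.append(
            "RUN apt-get update && apt-get install -y --no-install-recommends "
            + " ".join(packages)
            + " && rm -rf /var/lib/apt/lists/*"
        )
    # copy the application code into the image
    lines.append("WORKDIR /app")
    lines.append("COPY . /app")
    # EXPOSE: documents the port the application listens on
    lines.append(f"EXPOSE {port}")
    # CMD in exec form (a JSON array): what the container does when it starts
    lines.append("CMD " + json.dumps(command))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("ubuntu:22.04", ["python3"], 8080, ["python3", "app.py"]), end="")
```

Saving the returned text as `Dockerfile` next to your app and running `docker build -t myapp .` (the tag `myapp` is hypothetical) turns the blueprint into an image. Keep in mind that `EXPOSE` only documents the port; the port is actually published when the container is started with `-p`.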

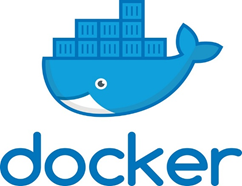

Docker Hub:

Docker’s specialty is running instances of images; that is what containers are. There’s no need to build your image from scratch if you don’t want to, thanks to Docker Hub. Docker Hub is an online registry that hosts thousands of pre-built images: base operating system images ready to be spun up, customized application images, and even images you create yourself and publish for others to use. After installing Docker, using the hub is as easy as browsing to hub.docker.com, finding the image your heart desires, and pulling it by name (for example, `docker pull ubuntu`).
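Under the hood, a short name like `ubuntu` is expanded into a full reference that points at Docker Hub: the `docker.io` registry, the `library/` namespace used for official images, and the default `latest` tag. The Python sketch below mimics that expansion in simplified form (digest references such as `@sha256:...` are not handled); `normalize_image_ref` is a hypothetical helper for illustration, not part of Docker.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image name roughly the way Docker resolves it (simplified sketch)."""
    name, tag = ref, "latest"  # no tag given means "latest"
    # A tag is whatever follows the last ':' that comes after the last '/',
    # so "localhost:5000/myapp" keeps its registry port and gets no tag.
    last_slash, last_colon = ref.rfind("/"), ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]
    first, _, rest = name.partition("/")
    # The first component counts as a registry host only if it looks like one
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name  # default registry: Docker Hub
    # Official Docker Hub images live under the "library/" namespace
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path
    return f"{registry}/{path}:{tag}"


if __name__ == "__main__":
    for ref in ("ubuntu", "ubuntu:22.04", "myuser/myapp:1.0", "localhost:5000/myapp"):
        print(f"{ref:22} -> {normalize_image_ref(ref)}")
```

This is why `docker pull ubuntu` and `docker pull docker.io/library/ubuntu:latest` fetch the same image. The `myuser/myapp` and `localhost:5000` references are made-up examples of a personal repository and a private registry.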

Containers:

When we talk Docker, we talk Containers. A Container is a running instance of an image, and it includes everything an application needs to function as intended. It is an isolated environment with its own filesystem, its own operating system userland (libraries and tools), its own processes, and even its own network, while the CPU and memory it can use are allocated and limited by the host. The main purpose of Containers is to let a developer build an application, package it into one container together with all the dependencies the app needs, and deploy it anywhere. A big differentiator between a Docker Container and a VM is speed. Where a VM can take minutes to boot, a Container starts in a matter of seconds! Why is that? Well… if you are anything like me, you like visuals, so let’s take a peek at the image below and I will explain.

Image source: Steven J. Vaughan-Nichols, ZDNet (Linux and Open Source), March 21, 2018. https://www.zdnet.com/article/what-is-docker-and-why-is-it-so-darn-popular/

In the image above, we have a VM on the left and a Docker Container on the right. A VM stack consists of a host OS, a Hypervisor, guest OSes, plus the applications and their dependencies. This also means that each guest OS has its own kernel. Every time we add a VM, we have to wait for its entire OS to boot. Not only does this cost us precious time, but as you can imagine, it also adds a lot of overhead and resource utilization. With Docker, we install one host OS on our server, Ubuntu for example, and it is shared among all containers, which means there is one kernel. The containers share the host OS kernel but otherwise run as isolated instances from one another. A new Container starts in seconds because the kernel of the underlying Ubuntu OS is already up and running; Docker only has to start the container’s processes. This is why Docker is so lightweight and fast compared with Virtual Machines.
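You can see the shared kernel for yourself. The Python sketch below, assuming a Linux host, prints the running kernel’s release and the namespace IDs of the current process. Run it on the host, then inside a container, for example with `docker run --rm -v "$PWD":/src python:3 python /src/ns_demo.py` (the file name `ns_demo.py` is hypothetical). The kernel release should be identical, because there is only one kernel, while the `pid`, `net`, `mnt`, and `uts` namespace IDs should differ, because namespaces are what keep each container isolated.

```python
import os
import platform


def kernel_release() -> str:
    # Reported by the running kernel: inside a Linux container this is the host's kernel
    return platform.release()


def namespace_ids(kinds=("pid", "net", "mnt", "uts")) -> dict:
    """Linux only: which namespaces this process lives in, as 'kind:[inode]' strings."""
    ids = {}
    for kind in kinds:
        try:
            ids[kind] = os.readlink(os.path.join("/proc/self/ns", kind))
        except OSError:
            # not Linux, /proc not mounted, or no access: skip this kind
            pass
    return ids


if __name__ == "__main__":
    print("kernel release:", kernel_release())
    for kind, ns in namespace_ids().items():
        print(f"{kind} namespace: {ns}")
```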

Docker Engine:

The heart and soul of Docker is the Docker Engine, built around a background process known as the Docker daemon. As you can see in the image above, the engine takes the place of the Hypervisor. The daemon is simply a process that runs on the host OS and is responsible for creating, running, and managing our containers. The engine is where the magic happens: it partitions our Linux-based host OS, Ubuntu in this case, into isolated environments, so each container can run a different Linux distribution’s userland (CentOS, Debian, etc.) on top of the same host kernel.
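The daemon exposes its work through a REST API, the Docker Engine API, and the `docker` command-line tool is a client of that API. On Linux the API listens by default on the Unix socket `/var/run/docker.sock`. The Python sketch below talks to it directly using only the standard library; the `/version` and `/containers/json` endpoints belong to the Engine API, while the class and function names are illustrative. Running it requires access to the socket (typically root or membership in the `docker` group).

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills the Host header; the socket path decides where we connect
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send GET <path> to the Docker Engine API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"GET {path} returned HTTP {resp.status}: {body[:200]!r}")
        return json.loads(body)
    finally:
        conn.close()


if __name__ == "__main__":
    print("Engine version:", docker_api_get("/version").get("Version"))
    # the running containers, i.e. the same list `docker ps` shows
    for c in docker_api_get("/containers/json"):
        print(c["Id"][:12], c["Image"], c["State"])
```

The `__main__` part prints roughly what `docker version` and `docker ps` report, which makes the split between the client and the daemon easy to see.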

Portability:

This is a HUGE feature and a big reason why developers choose Docker as their go-to software when building applications. Since a container packages the application together with all of its dependencies, it is not tied to the software installed on the host or to the underlying environment, so you don’t have to worry about building an application, moving it to a different device, and finding out it doesn’t work. Containers are isolated environments; as long as the target device has Docker installed, you’re golden! What you CAN’T do is run a Windows-based container on a Linux host, or vice versa, because containers share the host’s kernel.
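The rule in that last sentence can be written down as a simple check. Docker describes an image’s target as a platform string such as `linux/amd64` or `windows/amd64`, and `docker run` accepts a `--platform` flag. The sketch below uses hypothetical helpers that refuse an image whose OS does not match the kernel the containers will share, and otherwise return the same `docker run` command for any host; the image names and platform values are examples only.

```python
import platform
import shlex


def daemon_kernel_os() -> str:
    # Assumption: the Docker daemon shares this machine's kernel, which holds on a Linux host.
    # (Docker Desktop on macOS/Windows runs Linux containers inside a Linux VM instead.)
    return platform.system().lower()


def image_runs_on(image_platform: str, kernel_os: str) -> bool:
    # The OS half of "os/arch" must match the kernel that every container on the host shares
    image_os = image_platform.split("/", 1)[0].lower()
    return image_os == kernel_os.lower()


def run_command(image: str, image_platform: str, kernel_os: str) -> list[str]:
    # The same argv works on a laptop, a server, or a cloud VM, as long as the kernel OS matches
    if not image_runs_on(image_platform, kernel_os):
        raise ValueError(f"{image} targets {image_platform}, but the host kernel is {kernel_os}")
    return ["docker", "run", "--rm", "--platform", image_platform, image]


if __name__ == "__main__":
    try:
        print(shlex.join(run_command("ubuntu:22.04", "linux/amd64", daemon_kernel_os())))
    except ValueError as err:
        print("cannot run here:", err)
```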

On a side note, having secure, isolated environments gives us another benefit: we can manage our system’s resources more closely. For example, we can monitor how much CPU, memory, and network bandwidth each individual container is consuming.
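In practice, `docker stats` shows that live per-container usage, and `docker run` flags such as `--cpus 1.5` and `--memory 512m` cap it. Those caps are enforced by the kernel’s control groups (cgroups), so a process can read them from inside its own container. The Python sketch below assumes cgroup v2 mounted at `/sys/fs/cgroup`, as on recent Linux Docker hosts (cgroup v1 uses different file names); the helper names are illustrative.

```python
import os
from typing import Optional


def parse_cpu_max(text: str) -> Optional[float]:
    """cgroup v2 cpu.max holds "<quota> <period>" in microseconds, or "max <period>" if unlimited."""
    quota, period = text.split()
    if quota == "max":
        return None
    # e.g. "150000 100000" means 1.5 CPUs' worth of time per period
    return int(quota) / int(period)


def parse_memory_max(text: str) -> Optional[int]:
    """cgroup v2 memory files hold a byte count, or "max" (memory.max only) if unlimited."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_limits(cgroup_dir: str = "/sys/fs/cgroup") -> dict:
    """Read this container's CPU/memory caps and current memory use (None = unlimited or unavailable)."""
    sources = (
        ("cpus", "cpu.max", parse_cpu_max),
        ("memory_bytes", "memory.max", parse_memory_max),
        ("memory_used_bytes", "memory.current", parse_memory_max),
    )
    limits = {}
    for key, filename, parse in sources:
        try:
            with open(os.path.join(cgroup_dir, filename)) as f:
                limits[key] = parse(f.read())
        except (OSError, ValueError):
            # not cgroup v2, file missing, or unexpected contents
            limits[key] = None
    return limits


if __name__ == "__main__":
    print(read_limits())
```

Inside a container started with `--cpus 1.5 --memory 512m`, `read_limits()` would be expected to report `cpus` as 1.5 and `memory_bytes` as 536870912; without limits, both come back as None.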

Why Docker?

This truly goes back to portability, light weight, and speed, the three topics we touched on in this article. Docker makes it simple to move your application around, whether from laptop to server or to cloud providers like AWS and Azure. As a developer you gain a sense of freedom, because you can build your application in a container and know that you can run it ANYWHERE Docker runs, at ANY time, without surprises.

As our industry continues to evolve, DevOps integration with networking will become the new norm. It is our responsibility as Network Engineers to stay on top of these new (and maybe not-so-new, like Docker) technologies so we can be more efficient and, of course, continue to provide our clients with the best service possible. This blog barely scratches the surface of what Docker Containers can do, and I encourage you to take a deeper look. I believe this technology is here to stay and will become a highly reputable name in I.T.

Thank you for viewing this post. I hope it has been informative, and as always, if you have any questions or would like to schedule a free consultation, please feel free to reach out to us at sales@lookingpoint.com and we’ll be happy to help!

Will Panameno, Network Engineer