This past week I have been working on a new campus build-out for one of our customers and came across some interesting behavior regarding 100Gbps and 25Gbps uplinks between switches.

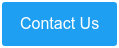

A simplified view of the setup in question is shown below:

We had a pair of Nexus 9000 switches (deployed as a vPC pair) for infrastructure connectivity, a pair of Catalyst 9500 switches (clustered with StackWise Virtual) fulfilling our aggregation layer role, and Catalyst 9300 switch stacks for endpoint connectivity.

Uplinks between the aggregation (Cat9500) and access (Cat9300) layers would operate at 25Gbps using the Cisco “SFP-25G-SR-S” transceivers over multimode OM4 fiber. The design called for two uplinks to each of our access stacks, providing 50Gbps of aggregate bandwidth. No more STP blocking thanks to clustering with StackWise Virtual. Sweet!

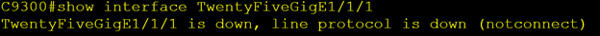

So, after configuring the uplinks I installed and cabled the transceivers, and much to my surprise the links remained hard down/down.

I went through my normal list of troubleshooting steps.

- Double checked config on both ends

- Confirmed ports were not in an admin down state

- Swapped the fiber TX/RX on one end of the connection

- Hard set the port speed and disabled negotiation

- Replaced fiber cable

- Replaced Transceivers

Still layer 1 remained hard down ☹

Next, I tried looping the 25Gb link back on the Cat9300 itself, and much to my surprise the link came straight up. The same was true when I repeated the test on the Cat9500. What was going on here? Both switches are from the same family and were running the same recommended code version, 16.9.4, but when I tried to connect them to each other at 25G they refused to talk.

I was left scratching my head, and my googling was not coming up with any helpful results. That’s when I remembered a conversation from many moons ago with a colleague of mine (big shout out to Trevor Butler, a.k.a. T-bone) who had described a similar issue. A quick call to Trevor and I had my answer.

Forward Error Correction (FEC).

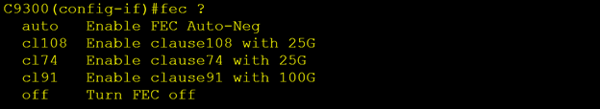

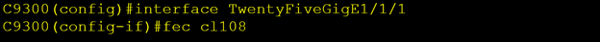

This was a new command to me, so I checked out what options were available under each of the uplink ports.

Both of my Catalysts had the default setting of “fec auto”. After trying a few different combinations, I hit a winner: with “fec cl108” configured on both ends, the link came up.
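For reference, here is a minimal sketch of what that looks like in the config. The interface names are hypothetical placeholders (yours will depend on the uplink modules and port numbering), and only the `fec cl108` line comes from the fix described above:

```
! Aggregation side (Cat9500) - interface name is a hypothetical example
interface TwentyFiveGigE1/0/1
 fec cl108
!
! Access side (Cat9300) - interface name is a hypothetical example
interface TwentyFiveGigE1/1/1
 fec cl108
```

The important part is that both ends are hard set to the same mode. "cl108" refers to Clause 108 of IEEE 802.3, the Reed-Solomon FEC defined for 25G Ethernet.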

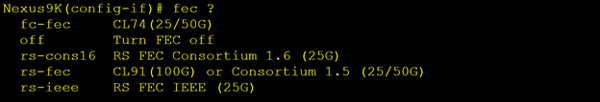

Having solved my 25Gig uplink issue, I moved on to my 100Gig cross-connect between the Nexus 9K and Cat9500. Once again, I hit a similar issue: 100Gig connections within each switch family worked fine, but when I connected 100Gig between the two different switching families the links would not come up.

Once again FEC auto negotiation was the culprit. The options available under the Nexus interface were different to what I had seen on the Catalyst.

The magic combination that worked this time was to disable FEC on both ends of the link.
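As a rough sketch, disabling FEC on each side would look something like the following. This assumes the `off` keyword of the `fec` interface command is what is used on both platforms, and the interface names are hypothetical, so check `fec ?` under your own interfaces before copying anything:

```
! Cat9500 side (IOS-XE) - interface name is a hypothetical example
interface HundredGigE1/0/49
 fec off
!
! Nexus 9K side (NX-OS) - interface name is a hypothetical example
interface Ethernet1/49
 fec off
```

Whether running without FEC is acceptable depends on the optics in use, so treat this as what worked in this particular build rather than a general recommendation.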

So, in summary, the next time you are connecting equipment at 25 or 100Gig and the link will not come up, I recommend you check out what FEC’ing options are available on both ends of the link, then hard set them to match. Hopefully FEC auto negotiation will eventually work across vendors and platforms, but until that happens hard setting is our only option.

As always if you have any questions on the best routing protocols for you and your business and would like to schedule a free consultation with us, please reach out to us at sales@lookingpoint.com and we’ll be happy to help!

Chris Marshall, Senior Solutions Architect - CCIE#29940